No free lunch

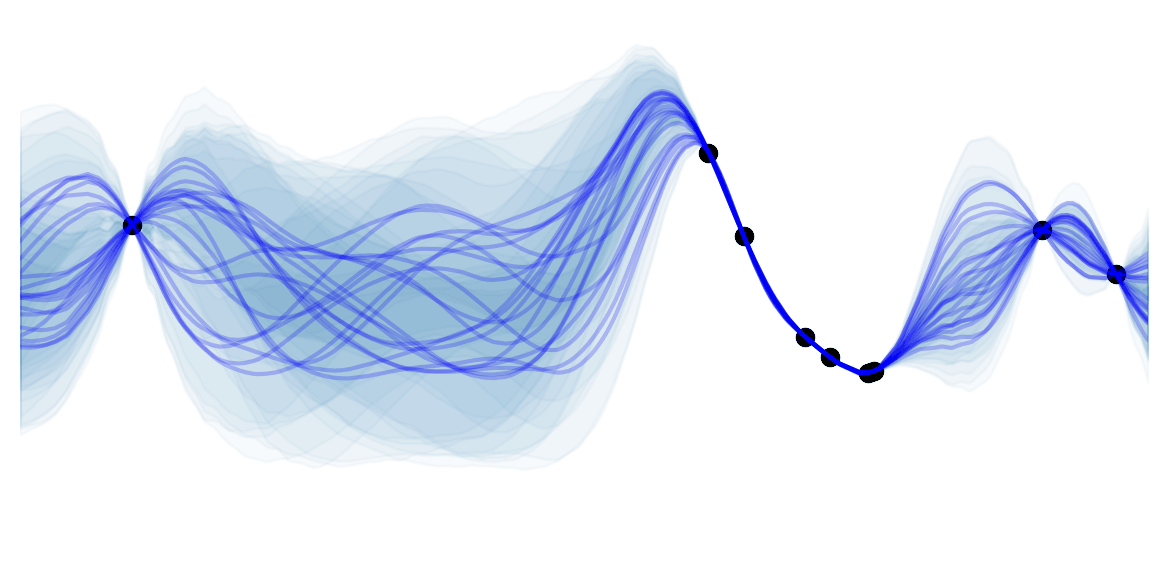

There is no one model that works best for every problem.

Let's try predicting the next fruit in the sequence:

You would probably say ![]() right ? Maybe with a lower probability you would say

right ? Maybe with a lower probability you would say ![]() . But have you thought of saying

. But have you thought of saying ![]() ? I doubt it. I never told you that the sequence was constrained in the type of fruit, but naturally we make assumptions that the data "behaves well". The point here is that without any knowledge/assumptions on the data, all future data are equally probable.

? I doubt it. I never told you that the sequence was constrained in the type of fruit, but naturally we make assumptions that the data "behaves well". The point here is that without any knowledge/assumptions on the data, all future data are equally probable.

The theorem builds on this, and states that every algorithm has the same performance when averaged over all data distributions. So the average performance of a deep learning classifier is the same as random classifiers.

![]() Side Notes :

Side Notes :

- You will often hear the name of this theorem when someone asks a question starting with "what is the best […] ?".

- In the real world, things tend to "behave well". They are for example often (locally) continuous. In such settings some algorithms are definitely better than others.

- Since the theorem publication in 1996, other methods have kept the lunch metaphor. For example: kitchen sink algorithms, random kitchen sink, fastfood, à la carte, and that's one of the reason why I decided to stick with fruit examples in this blog

.

. - The theorem has been extend to optimization and search algorithms.

![]() Resources : D. Wolpert's proof.

Resources : D. Wolpert's proof.