Machine learning glossary

Welcome!

This is a collection of posts about general machine learning concepts that I use as my personal ML glossary.

What

I will try to summarize important terms and concepts of machine learning. The focus is on intuition but there will also be practical and theoretical notes.

Target audience: this is for you if you:

- Have a decent understanding of a concept but want more intuition.

- Knew a term, but want to refresh your knowledge as it's hard to remember everything (that's me).

- Need to learn the important concepts in an efficient way. Students cramming for an exam: that's for you!

Why

Having a bad memory but being (or at least considering myself to be) a philomath who loves machine learning, I developed the habit of taking notes, then summarizing, and finally making a cheat sheet for every new ML domain I encounter. There are multiple reasons I want to switch to a web page:

- Paper is not practical and prone to loss.

- Thinking that someone I don't know (I'm talking about you) might read this post makes me write higher quality notes.

- I'm forever grateful to people that spend time on forums and open source projects. I now want to give back to the community (the contribution isn't comparable, but I have to start somewhere).

- Taking notes on a computer is a necessary step for my migration to CS.

- As a wise man once said:

You do not really understand something unless you can explain it to your grandmother. - Albert Einstein

My grandmas are awesome but not really into ML (yet). You have thus been designated "volunteer" to temporarily replace them.

Keeping a uniform notation in machine learning is not easy as many sub-domains use different notations due to historical reasons. I will try using a uniform notation for all the glossary: To make it easier to search the relevant information in the Glossary here is the color coding I will be using:Notation

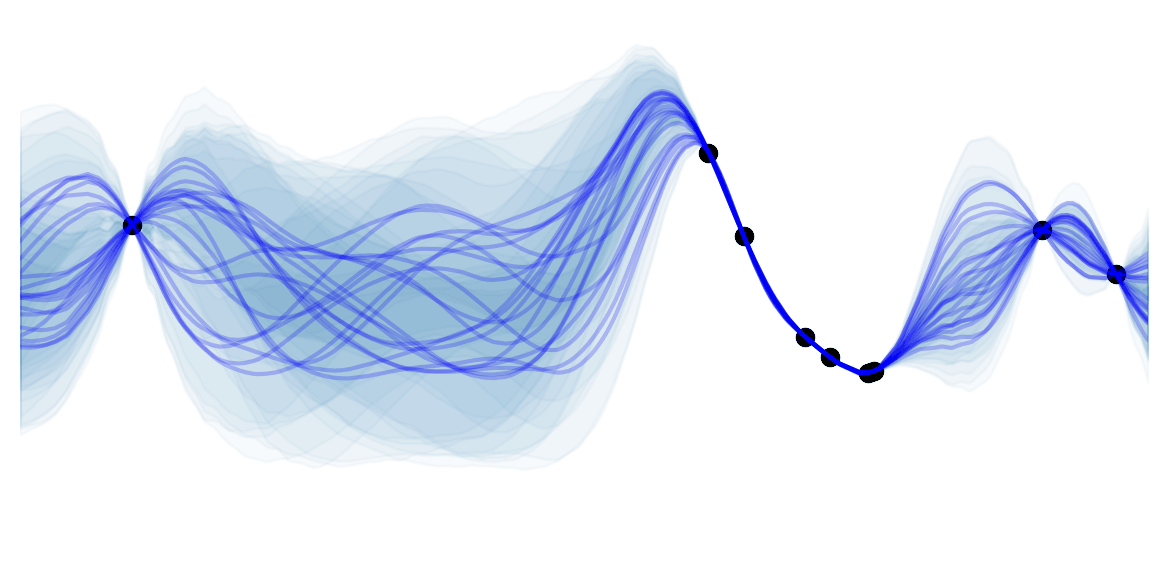

- Intuition

- Practical

- Disadvantage

- Advantage

- Example

- Side Notes

- Compare to

- Resources

Disclaimer:

- This is my first post ever, and I would love to get your feedback.

- I'm bad at spelling: apologies in advance (feel free to correct me).

- Check out the resources from where I got most of this information.

- ML sub-domains overlap A LOT. I will try not to make the separations too artificial. Any suggestions would be appreciated. Note that I separate domains both by learning style and by algorithm similarity.

- Many parts are still missing; come back soon for those.

Enough talking: prepare your popcorn and let us get going!